AI-Powered E2E Testing with Midscene.js and Playwright

E2E testing without writing selectors. In this post, we'll try using Midscene.js to control the UI with natural-language commands, together with Playwright, the popular framework for automated browser testing.

What is Midscene.js?

Midscene.js is a JavaScript SDK that uses large language models (such as GPT-4o or Qwen) to interpret our commands, such as:

"Type `art toy` and search"
"Extract all product names and prices"

It then converts them into actual browser interactions, so we don't have to write any DOM/selector code.

Integrate with Playwright

For the full Playwright config and the complete E2E code, see the example project created by the Midscene.js team and the official integration guide:
https://github.com/web-infra-dev/midscene-example/blob/main/playwright-demo
https://midscenejs.com/integrate-with-playwright.html

Here we'll try searching for "chaka" on POP MART's Thai website.

./e2e/popmart.spec.ts

import { expect } from "@playwright/test";
import { test } from "./fixture"; // Midscene's Playwright fixture (see the example project)

test.beforeEach(async ({ page, aiTap }) => {
  // setViewportSize returns a Promise, so it must be awaited
  await page.setViewportSize({ width: 1280, height: 768 });
  await page.goto("https://www.popmart.com/th");
  await page.waitForLoadState("load");
  await aiTap('click the "ยอมรับ" (accept) button for privacy policy at the bottom of the page');
});

test("search chaka on popmart", async ({
  ai,
  aiQuery,
  // the fixtures below are unused here; listed only to show what's available
  aiAssert,
  aiWaitFor,
  aiNumber,
  aiBoolean,
  aiString,
  aiLocate,
}) => {
  // 👀 type keywords, perform a search
  await ai('type "chaka" in search box located in the top appbar, hit Enter');

  // 👀 wait for the loading
  await aiWaitFor("there is at least one item on page");

  // 👀 find the items
  const items = await aiQuery(
    "{itemTitle: string, price: Number}[], find item in list and price"
  );

  console.log("items", items);
  expect(items?.length).toBeGreaterThan(0);
});

Step by step

1. Go to https://www.popmart.com/th and wait until the page has finished loading.
2. Use `aiTap` to click the accept button in the cookie consent banner.

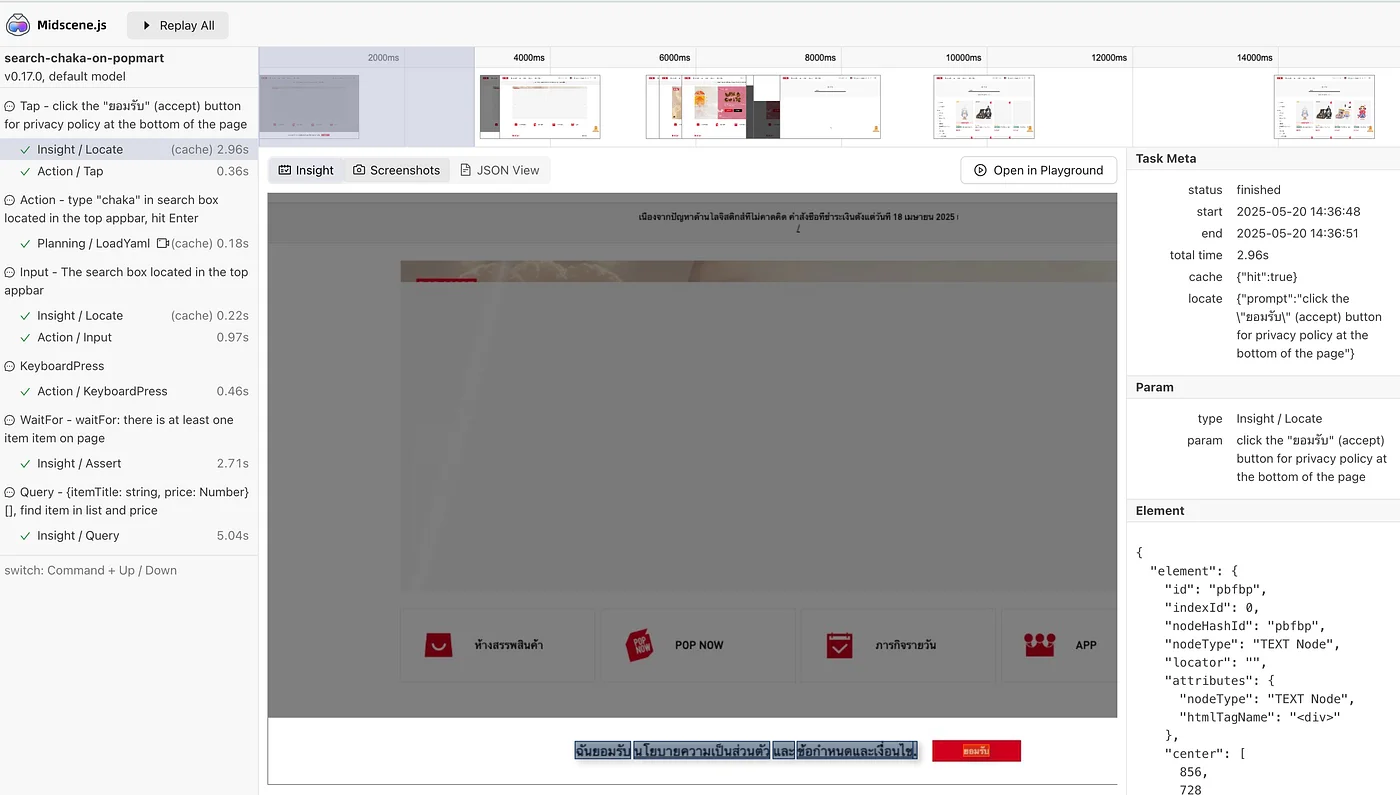

Another advantage of using Midscene is that it reports each action visually.

3. `ai('type "chaka" in search box located in the top appbar, hit Enter')` tells the AI to find the search box in the top app bar, type "chaka", and press Enter.

If you try this on the actual website, you'll see that clicking the search box doesn't just let you type into it: the UI opens a drawer-like panel first, and Midscene handles that too.

4. `await aiWaitFor("there is at least one item on page")` waits until items appear.

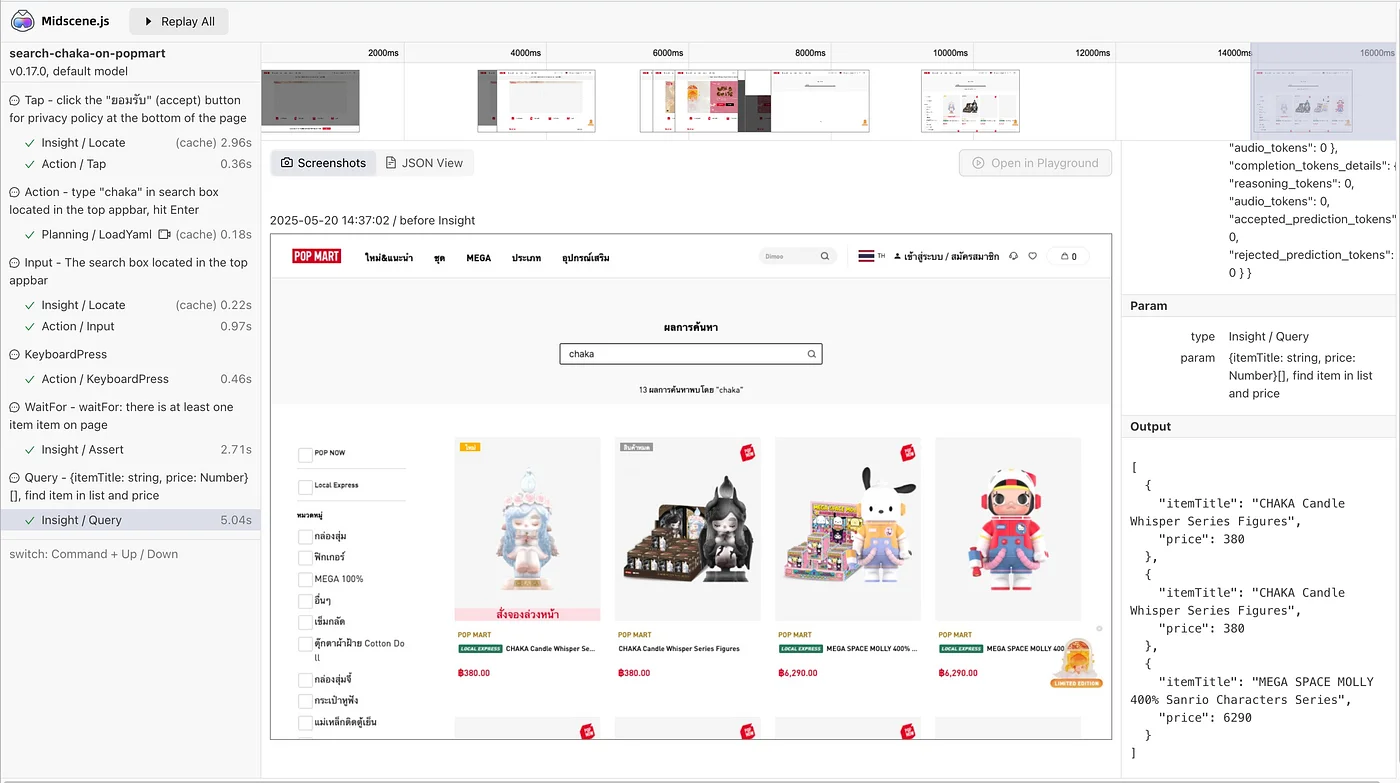

5. With `aiQuery`, we ask it to extract the items and their prices: `{itemTitle: string, price: Number}[], find item in list and price`.

6. Expect at least one item to be found. In practice, we could also add a condition that every item must be related to the search keyword (in this case, Chaka).

Note, however, that Midscene works from a screenshot of the page, so it only sees what is currently visible on screen.

items [
  { itemTitle: 'CHAKA Candle Whisper Series Figures', price: 380 },
  { itemTitle: 'CHAKA Candle Whisper Series Figures', price: 380 },
  {
    itemTitle: 'MEGA SPACE MOLLY 400% Sanrio Characters Series',
    price: 6290
  }
]
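Given output like this, the stricter keyword check mentioned above could look something like the sketch below. It is a minimal, self-contained sketch and not part of Midscene: `Item`, `isItemList`, and `itemsMatching` are hypothetical helper names, and it assumes `aiQuery` returns the `{itemTitle, price}[]` shape we asked for in the prompt. Because the result comes from a model, it checks the shape at runtime before trusting it.

```typescript
// Shape we asked aiQuery for in the prompt (hypothetical type name).
interface Item {
  itemTitle: string;
  price: number;
}

// Runtime guard: model output is untrusted, so verify the shape first.
function isItemList(value: unknown): value is Item[] {
  return (
    Array.isArray(value) &&
    value.every(
      (v) =>
        typeof v === "object" &&
        v !== null &&
        typeof (v as Item).itemTitle === "string" &&
        typeof (v as Item).price === "number",
    )
  );
}

// Keep only items whose title mentions the keyword (case-insensitive).
function itemsMatching(items: Item[], keyword: string): Item[] {
  const needle = keyword.toLowerCase();
  return items.filter((item) => item.itemTitle.toLowerCase().includes(needle));
}

// Sample data copied from the console output above.
const items: unknown = [
  { itemTitle: "CHAKA Candle Whisper Series Figures", price: 380 },
  { itemTitle: "CHAKA Candle Whisper Series Figures", price: 380 },
  { itemTitle: "MEGA SPACE MOLLY 400% Sanrio Characters Series", price: 6290 },
];

if (!isItemList(items)) {
  throw new Error("aiQuery returned an unexpected shape");
}
const relevant = itemsMatching(items, "chaka");
console.log(`${relevant.length} of ${items.length} items mention "chaka"`);
// prints: 2 of 3 items mention "chaka"
```

Inside the test, the assertion could then become something like `expect(itemsMatching(items, "chaka")).toHaveLength(items.length)`. With the sample output above, that check would fail because the MOLLY item has nothing to do with Chaka, which is exactly the kind of result the stricter condition is meant to catch.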

Report

After a run, we can open the report and inspect each action: whether it was an AI action, how many tokens it used, and how long it took.

Caching

Many people will probably ask: "Won't this be expensive and slow if we have to call the AI every time?"

Midscene can cache planning steps and the XPaths of located elements to reduce calls to the AI model. After the first test run, it writes the cache as a YAML file:

midscene_run/cache/popmart.spec.ts(search-chaka-on-popmart).cache

midsceneVersion: 0.17.0
cacheId: popmart.spec.ts(search-chaka-on-popmart)
caches:
  - type: locate
    prompt: >-
      click the "ยอมรับ" (accept) button for privacy policy at the bottom of the
      page
    xpaths:
      - //*[@id="__next"]/div[1]/div[1]/div[4]/div[1]/div[2]/text()
  - type: locate
    prompt: The search box in the top appbar
    xpaths:
      - >-
        //*[@id="__next"]/div[1]/div[1]/div[1]/div[1]/div[2]/div[2]/div[1]/div[1]/div[1]/div[1]/div[1]/div[1]/img[1]
  - type: plan
    prompt: type "chaka" in search box located in the top appbar, hit Enter
    yamlWorkflow: |
      tasks:
        - name: type "chaka" in search box located in the top appbar, hit Enter
          flow:
            - aiInput: chaka
              locate: The search box located in the top appbar
            - aiKeyboardPress: Enter
  - type: locate
    prompt: The search box located in the top appbar
    xpaths:
      - >-
        //*[@id="__next"]/div[1]/div[1]/div[1]/div[1]/div[2]/div[1]/div[1]/text()

This is the cache created after running the POP MART search test. You can see it has recorded both the plan and the XPaths.

So the next time we run with the cache, Midscene replays these steps instead of calling the AI.

The exceptions are `aiBoolean`, `aiQuery`, and `aiAssert`, which aren't cached because they are queries, not actions.
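To make the caching idea concrete, here is a toy model of it: look up a cached result by prompt before calling the model, and never cache queries. This is a simplified, in-memory sketch, not Midscene's actual implementation (which persists its cache to the YAML file above). `StepKind`, `ModelCall`, `createStepRunner`, and `fakeModel` are hypothetical names, and the `locate`/`plan` kinds only loosely mirror the `type` field in that file.

```typescript
// Hypothetical step kinds: "locate" and "plan" are actions (cacheable);
// "query" stands in for aiQuery / aiBoolean / aiAssert (never cached).
type StepKind = "locate" | "plan" | "query";

type ModelCall = (kind: StepKind, prompt: string) => string;

// Wraps a (hypothetical) model call with a prompt-keyed cache.
function createStepRunner(callModel: ModelCall) {
  const cache = new Map<string, string>();
  const stats = { modelCalls: 0, cacheHits: 0 };

  function run(kind: StepKind, prompt: string): string {
    // Queries read the current page state, so they always go to the model.
    if (kind === "query") {
      stats.modelCalls++;
      return callModel(kind, prompt);
    }
    // Actions are keyed by kind + prompt; a hit skips the model entirely.
    const key = `${kind}:${prompt}`;
    const hit = cache.get(key);
    if (hit !== undefined) {
      stats.cacheHits++;
      return hit;
    }
    stats.modelCalls++;
    const result = callModel(kind, prompt);
    cache.set(key, result);
    return result;
  }

  return { run, stats };
}

// Fake model for illustration: returns a placeholder instead of a real XPath or plan.
const fakeModel: ModelCall = (kind, prompt) => `${kind} result for "${prompt}"`;

const runner = createStepRunner(fakeModel);
// Simulate two test runs that share the same cache.
for (let i = 0; i < 2; i++) {
  runner.run("locate", "The search box located in the top appbar");
  runner.run("plan", 'type "chaka" in search box located in the top appbar, hit Enter');
  runner.run("query", "{itemTitle: string, price: Number}[], find item in list and price");
}
console.log(runner.stats); // { modelCalls: 4, cacheHits: 2 }
```

The first simulated run makes three model calls; the second makes only one, for the query. A real cache would also have to deal with entries that go stale when the page structure changes, which this sketch ignores.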

AI Model

Since Midscene.js is an open-source project that isn't tied to any cloud provider or model, we can choose either a publicly hosted model or a privately deployed one.

Advantages of Using AI for E2E Testing Compared to Traditional Testing

- Understands the UI without being tied to DOM selectors
  - If an element changes type (e.g. `<button>` → `<a>`), traditional test scripts break
  - AI looks at UI context such as text, color, and position instead, and can even check things like "is it a circle?" or "is it red?"
- Easier communication with non-developer team members
  - PMs and designers can read the tests
  - They can even write basic prompts themselves without knowing JavaScript
  - For example: "open settings page and check if there's a toggle for dark mode"
- Suitable for testing business logic and high-level scenarios
- Easier to write tests for dynamic UI
  - For example: "click confirm and there should be a success dialog at the top right corner"
  - Traditional tests might need many branches, but AI understands context better

However, we still shouldn't cram every AI action into a single command. Actions should stay clearly separated: this is a test, and we don't want non-deterministic behavior from one run to the next in CI. See Midscene's guide on using the structured API: https://midscenejs.com/blog-programming-practice-using-structured-api.html

Conclusion

In my view, AI-based testing is like having an "assistant that understands the UI" to help write tests. It doesn't replace everything, and we need to understand that limitation too. But it helps with real user interaction flows: we can think in visual terms, like "when a user does this, this should appear on screen." Some elements can be very difficult to target with selectors, yet from an actual user's perspective the interaction is very simple.